Straggler Mitigation

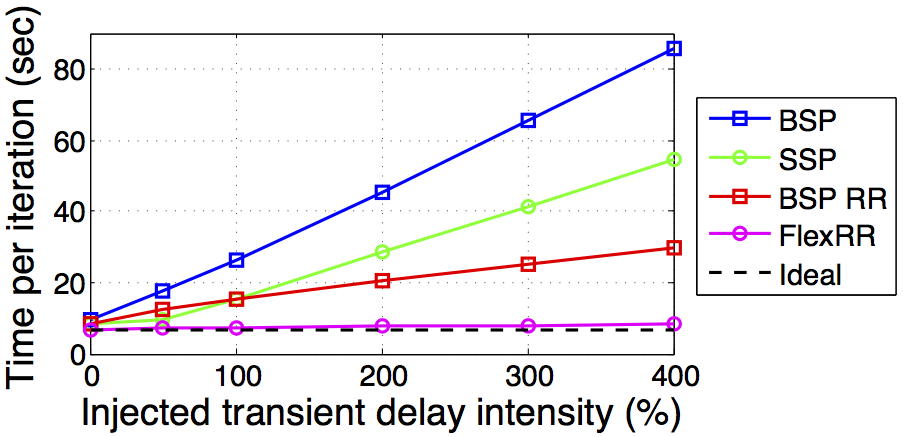

Distributed executions of iterative machine learning (ML) algorithms can suffer significant performance losses due to stragglers, i.e., workers that run slower than their peers. The regular (e.g., per-iteration) barriers of the traditional Bulk Synchronous Parallel (BSP) approach cause every transient slowdown of any worker thread to delay all the others. This project develops a scalable, efficient solution to the straggler problem for this important class of ML algorithms, combining a more flexible synchronization model with dynamic peer-to-peer reassignment of work among workers. Experiments with real straggler behavior observed on Amazon EC2, as well as stress tests with injected straggler behavior, confirm both the significance of the problem and the effectiveness of the solution, as implemented in a framework called FlexRR. Using FlexRR, we consistently observe near-ideal run times (i.e., close to the run time with no performance jitter) across all real and injected straggler behaviors tested.
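To make the barrier argument concrete, here is a minimal toy simulation. It is a sketch under our own simplifying assumptions, not FlexRR's implementation. A bounded-staleness rule in the style of Stale Synchronous Parallel stands in for "a more flexible synchronization model": a worker may start iteration `c` once every worker has finished iteration `c - staleness - 1`, so `staleness=0` reproduces a BSP barrier. An idealized pairwise rebalancing stands in for peer-to-peer work reassignment; it assumes each worker's speed is known in advance and uses a fixed, hypothetical pairing of workers. The function names `makespan`, `rebalance_pairs`, and `build_durations` are illustrative only.

```python
def makespan(durations, staleness):
    """Simulated time until every worker finishes its last iteration.

    durations[i][c] is the time worker i spends computing iteration c.
    Worker i may start iteration c only after (a) finishing its own
    iteration c-1 and (b) every worker has finished iteration
    c - staleness - 1. With staleness=0 this is a per-iteration BSP barrier.
    """
    n, iters = len(durations), len(durations[0])
    finish = [[0.0] * iters for _ in range(n)]
    for c in range(iters):
        gate = c - staleness - 1  # iteration everyone must have completed
        barrier = max(finish[j][gate] for j in range(n)) if gate >= 0 else 0.0
        for i in range(n):
            own_prev = finish[i][c - 1] if c > 0 else 0.0
            finish[i][c] = max(own_prev, barrier) + durations[i][c]
    return max(row[-1] for row in finish)


def rebalance_pairs(work, speed, pairs):
    """Split each peer pair's combined work so both peers finish together.

    Idealized assumption: each worker's speed for this iteration is known
    up front, and pairs are disjoint and fixed (hypothetical pairing).
    """
    work = list(work)
    for a, b in pairs:
        t = (work[a] + work[b]) / (speed[a] + speed[b])  # common finish time
        work[a], work[b] = t * speed[a], t * speed[b]
    return work


def build_durations(n, iters, pairs=None):
    """Rotating transient straggler: worker c % n runs at half speed in iteration c."""
    durations = [[0.0] * iters for _ in range(n)]
    for c in range(iters):
        speed = [0.5 if i == c % n else 1.0 for i in range(n)]
        work = [1.0] * n  # one unit of work per worker per iteration
        if pairs:
            work = rebalance_pairs(work, speed, pairs)
        for i in range(n):
            durations[i][c] = work[i] / speed[i]
    return durations


if __name__ == "__main__":
    N, ITERS, PAIRS = 4, 20, [(0, 1), (2, 3)]
    plain = build_durations(N, ITERS)
    helped = build_durations(N, ITERS, PAIRS)
    print("no jitter (ideal):", float(ITERS))
    print("BSP:", makespan(plain, 0))
    print("staleness 2:", makespan(plain, 2))
    print("BSP + pair rebalance:", round(makespan(helped, 0), 2))
    print("staleness 2 + pair rebalance:", round(makespan(helped, 2), 2))
```

In this toy setup, BSP takes 40.0 time units against an ideal of 20.0, because every iteration waits for the one slowed worker; a staleness bound of 2 brings it to 25.0, and adding pair rebalancing lowers the two cases to about 26.67 and 23.33. The staleness bound helps here only because each worker is slowed equally often. A persistently slow worker would still hold the others back under any bounded staleness, which is the case that moving work between peers addresses.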

|

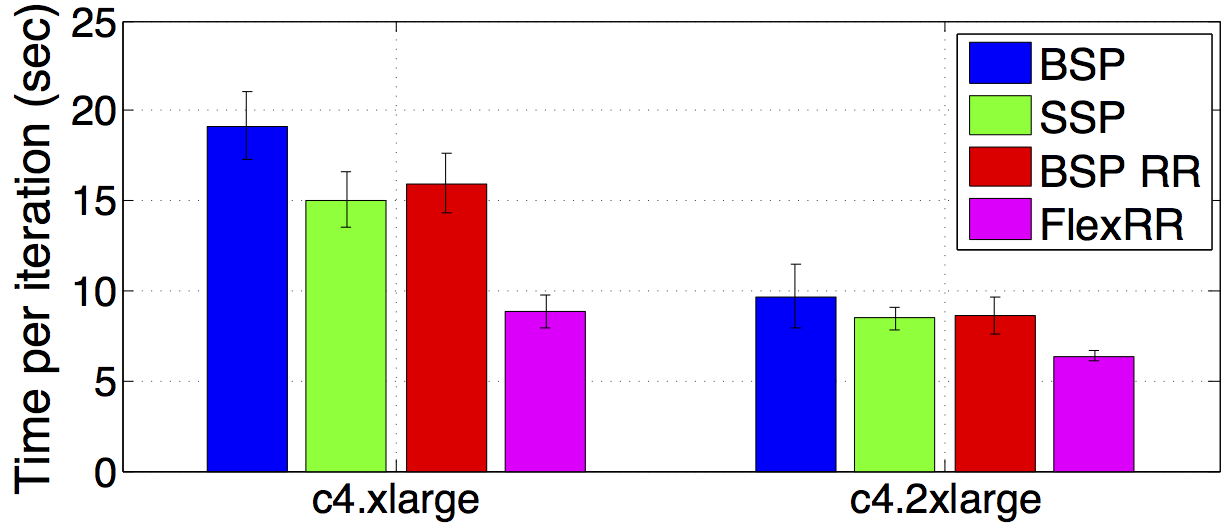

| Matrix Factorization Running on two classes of AWS EC2 Machines |

|

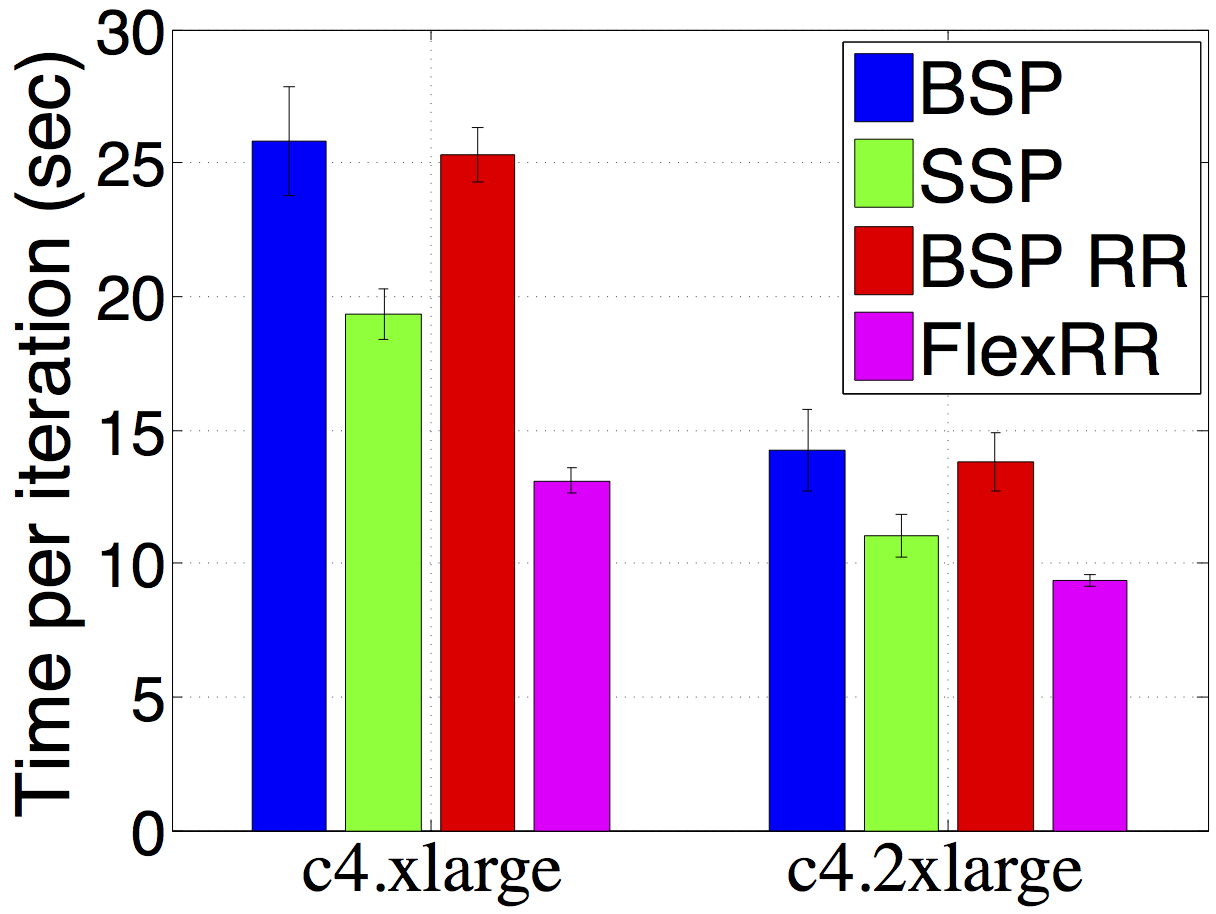

| LDA Running on two classes of AWS EC2 Machines |

|

| FlexRR resolving emulated straggler patterns |

People

FACULTY

Greg Ganger

Phil Gibbons

Garth Gibson

Eric Xing

GRAD STUDENTS

Aaron Harlap

Henggang Cui

Publications

- Solving the Straggler Problem for Iterative Convergent Parallel ML

Aaron Harlap, Henggang Cui, Wei Dai, Jinliang Wei, Gregory R. Ganger, Phillip B. Gibbons, Garth A. Gibson, Eric P. Xing. Carnegie Mellon University Parallel Data Laboratory Technical Report CMU-PDL-15-102, April 2015.


Acknowledgements

We thank the members and companies of the PDL Consortium: Bloomberg LP, Datadog, Google, Intel Corporation, Jane Street, LayerZero Labs, Meta, Microsoft Research, Oracle Corporation, Oracle Cloud Infrastructure, Pure Storage, Salesforce, Samsung Semiconductor Inc., Uber, and Western Digital for their interest, insights, feedback, and support.