File System Virtual Appliances

Building and maintaining third-party file systems (FSs) is painful. Of course, OS functionality is notoriously difficult to develop and debug, and FSs are more so than most because of their size and interactions with other OS components (e.g., the virtual memory system). But, for third-party FSs, which are FSs not explicitly maintained by the OS implementers as a core part of the OS, there is a rarely-appreciated challenge: dealing with changes from one OS version to the next.

One would like to believe that the virtual file system (VFS) layer present in most OSs insulates the FS implementation from the rest of the kernel, but the reality is far from this ideal. Instead, even when the VFS interfaces remain constant, internal FS compatibility rarely exists between one kernel version and the next. Changes in syntax, locking semantics, memory management, and preemption practices create differences that require version-specific code in the FS implementation. For “native” FSs supported by the kernel implementers (e.g., ext2 and NFS in Linux), appropriate corrections are made in the FS as a part of the new kernel version. For third-party FSs, however, they are not. As each new version is released, whether as a patch or a complete replacement, the third-party FS maintainers must figure out what changed, modify their code accordingly, and provide the new FS version. Because users of the third-party FS may be using any of the previously supported OS versions, all must be maintained and the code becomes riddled with version-specific “#ifdef”s, making it increasingly difficult to understand and modify correctly.

The pain and effort involved with third-party FSs create a large barrier for those seeking to innovate, and wear on those who choose to do so. Most researchers sidestep these issues by prototyping in just one OS version. Many also avoid kernel programming by using user-level FS implementations, via NFS-over-loopback or a mechanism like FUSE, and some argue that such an approach sidesteps version compatibility issues. But, it really doesn’t.

First, performance and semantic limitations prevent most production FSs from relying on user-level approaches. Second, and more fundamentally, user-level approaches do not insulate an FS from application-level differences among OS distributions (e.g., shared library availability and file locations) or from kernel-level issues (e.g., handling of memory pressure). So, third-party FS developers address the problem with brute force.

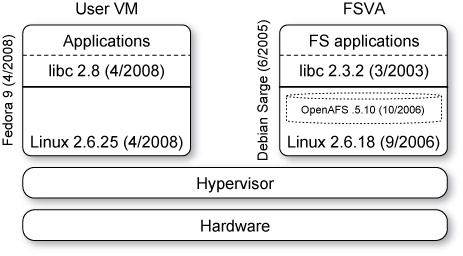

This research promotes a new approach (Figure 1) for third-party FSs, leveraging virtual machines to decouple the OS version in which the FS runs from the OS version used by the user’s applications. The third-party FS is distributed as a file system virtual appliance (FSVA), a pre-packaged virtual machine (VM) loaded with the FS. The FSVA runs the FS developers’ preferred OS version, with which they have performed extensive testing and tuning. The user(s) run their applications in a separate VM, using their preferred OS version. Because it runs in a distinct VM, the third-party FS can be used by users who choose OS versions to which it is never ported.

For this FSVA approach to work, an FS-agnostic proxy must be a “native” part of the OS—it must be maintained across versions by the OS implementers. The hope is that, because of its small size and value to a broad range of third-party FS implementers, the OS implementers would be willing to adopt such a proxy. The integration and maintenance of FUSE, a kernel proxy for user-level FS implementations, in Linux, NetBSD, and OpenSolaris bolster this hope.

The paper "File System Virtual Appliances: Third-party File System Implementations without the Pain" (link below) details the design and implementation of FSVA support in Linux, using Xen as the VM platform. The Xen communication primitives allow for reasonable performance, relative to a native in-kernel FS implementation—for example, an OpenSSH build runs within 15% of the native performance. Careful design is needed, however, to ensure FS semantics, maintain OS features like a unified buffer cache, minimize OS changes in support of the proxy, and avoid loss of virtualization features such as isolation, resource accounting, and migration. Our prototype system realizes all of these design goals.

Figure 1. A file system runs in a VM provided by the third-party FS vendor. A user continues to run their preferred OS environment (including kernel version, default distribution, and library versions). By decoupling the user and FS OSs, one allows users to use any OS environment without needing a corresponding FS port from the FS vendor.

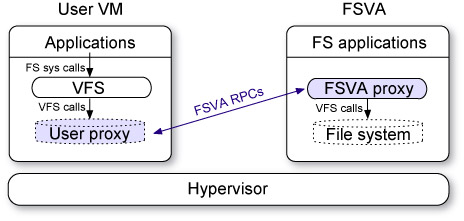

Figure 2 illustrates the FSVA architecture. User applications run in a user’s preferred OS environment (i.e., OS distribution and kernel version). An FS implementation executes in a VM running the FS vendor’s preferred OS environment. In the user OS, an FS-independent proxy registers as an FS with the VFS layer. The user proxy transports all VFS calls to a proxy in the FSVA that sends the VFS calls to the actual FS implementation. The two proxies perform translation to/from a common VFS interface and cooperate to maintain OS and VM features such as a unified buffer cache (§4.4) and migration (§4.6), respectively.
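The call path described above can be illustrated with a small user-space sketch. It is not the prototype's code: the real proxies live in the kernel and communicate over Xen primitives, whereas here the transport is a plain function call and the "common VFS interface" is a JSON message. All class names, the message fields, and the two supported operations are hypothetical and chosen only for illustration.

```python
import json
from typing import Any, Callable, Dict


class InMemoryFS:
    """Toy stand-in for the FS implementation running inside the FSVA."""

    def __init__(self) -> None:
        self.files: Dict[str, bytes] = {}

    def write(self, path: str, data: bytes) -> int:
        self.files[path] = data
        return len(data)

    def read(self, path: str) -> bytes:
        return self.files[path]


class FSVAProxy:
    """Runs in the FSVA: decodes common-format requests and calls the FS."""

    def __init__(self, fs: InMemoryFS) -> None:
        self.fs = fs

    def handle(self, wire_request: str) -> str:
        req = json.loads(wire_request)
        if req["op"] == "write":
            written = self.fs.write(req["path"], bytes.fromhex(req["data"]))
            return json.dumps({"ok": True, "result": written})
        if req["op"] == "read":
            try:
                data = self.fs.read(req["path"])
            except KeyError:
                return json.dumps({"ok": False, "error": "ENOENT"})
            return json.dumps({"ok": True, "result": data.hex()})
        # Unknown operation: report it rather than guessing.
        return json.dumps({"ok": False, "error": "ENOSYS"})


class UserProxy:
    """Runs in the user OS: looks like an FS to callers, forwards every call."""

    def __init__(self, transport: Callable[[str], str]) -> None:
        # The transport is an assumption: any function that delivers a
        # request string to the FSVA and returns the reply string.
        self.transport = transport

    def _call(self, **request: Any) -> Any:
        reply = json.loads(self.transport(json.dumps(request)))
        if not reply["ok"]:
            raise OSError(reply["error"])
        return reply["result"]

    def write(self, path: str, data: bytes) -> int:
        return self._call(op="write", path=path, data=data.hex())

    def read(self, path: str) -> bytes:
        return bytes.fromhex(self._call(op="read", path=path))


# Wire the two proxies together with a direct function call as the transport.
fsva = FSVAProxy(InMemoryFS())
user_fs = UserProxy(transport=fsva.handle)
user_fs.write("/a.txt", b"hello")
print(user_fs.read("/a.txt"))  # prints b'hello'
```

The point of the sketch is that neither side depends on the other's internals: the user proxy knows only the message format, so the FS behind the FSVA proxy could be swapped without touching the user side.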

Using an FSVA, a third-party FS developer can tune and debug their implementation to a single OS version without concern for the user’s particular OS version. The FS will be insulated from both user-space and in-kernel differences in user OS versions, because it interacts with just the one FSVA OS version. Even issues like the poor handling of memory pressure in RHEL kernels can be addressed by simply not using such a kernel in the FSVA—the FS implementer can choose an OS version that does not have ill-chosen policies, rather than being forced to work with them because of a user’s OS choice.

Figure 2. FSVA architecture. An FS and its (optional) management applications run in a dedicated VM. FS-agnostic proxies running in the client OS and the FSVA pass VFS calls via an efficient RPC transport.

The efficacy of the FSVA architecture is demonstrated with a number of case studies. Three real file systems (OpenAFS, ext2, and NFS) are transparently provided, via an FSVA, to applications running in a different VM, which can be running a different OS version (e.g., Linux kernel versions 2.6.18 vs. 2.6.25). No changes were required to the FS implementation in the FSVA. In contrast, analysis of the change logs for these file systems shows that significant developer effort was required to make them compatible under the traditional approach. To further illustrate the extensibility enabled by the FSVA architecture, we demonstrate an FS specialization targeted for VM environments: intra-machine cooperative caching eliminates redundant I/O from distinct VMs that share files in a distributed FS. This extension can be supported in an FSVA with no modification to the user OS. Note that, with the FSVA architecture, resources previously applied to version compatibility can instead go toward such feature addition and tuning.
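The idea behind intra-machine cooperative caching can be sketched in a few lines. This is a simplified illustration, not the mechanism from the paper: the `CooperativeCache` class, its block-keyed dictionary, and the `fetch_from_server` callback are all hypothetical, and the sketch ignores cache eviction and consistency with the remote server.

```python
from typing import Callable, Dict, Tuple


class CooperativeCache:
    """Hypothetical block cache shared by all user VMs on one machine."""

    def __init__(self, fetch_from_server: Callable[[str, int], bytes]) -> None:
        self.fetch_from_server = fetch_from_server
        self.blocks: Dict[Tuple[str, int], bytes] = {}
        self.server_reads = 0                      # I/Os sent to the remote FS
        self.requests_by_vm: Dict[str, int] = {}   # reads issued by each VM

    def read_block(self, vm_id: str, path: str, block_no: int) -> bytes:
        self.requests_by_vm[vm_id] = self.requests_by_vm.get(vm_id, 0) + 1
        key = (path, block_no)
        if key not in self.blocks:
            # First VM to touch this block pays for the remote read.
            self.server_reads += 1
            self.blocks[key] = self.fetch_from_server(path, block_no)
        # Any later VM asking for the same block is served locally.
        return self.blocks[key]


def fake_server(path: str, block_no: int) -> bytes:
    """Stand-in for a read against the distributed FS server."""
    return f"{path}#{block_no}".encode()


cache = CooperativeCache(fake_server)
for vm in ("vm-a", "vm-b"):
    for block in range(4):
        cache.read_block(vm, "/shared/data", block)

print(sum(cache.requests_by_vm.values()))  # prints 8
print(cache.server_reads)                  # prints 4
```

In this toy run the two VMs issue eight block reads in total, but only four reach the server, because the second VM's reads are satisfied from the shared cache.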

People

FACULTY

Greg Ganger

Garth Gibson

Michael Reiter

GRAD STUDENTS

Michael Abd-El-Malek

James Cipar

Elie Krevat

Matthew Wachs

Publications

- File System Virtual Appliances: Portable File System Implementations. Michael Abd-El-Malek, Matthew Wachs, James Cipar, Karan Sanghi, Gregory R. Ganger, Garth A. Gibson, Michael K. Reiter. ACM Transactions on Storage, Vol. 8, No. 3, Article 39, May 2012.

Abstract / PDF [518K]

- File System Virtual Appliances: Portable File System Implementations. Michael Abd-El-Malek, Matthew Wachs, James Cipar, Karan Sanghi, Gregory R. Ganger, Garth A. Gibson, Michael K. Reiter. Carnegie Mellon University Parallel Data Lab Technical Report CMU-PDL-10-105, April 2010.

Abstract / PDF [513K]

- File System Virtual Appliances. Michael Abd-El-Malek. Ph.D. Dissertation. Carnegie Mellon University Parallel Data Lab Technical Report CMU-PDL-09-109, August 2009.

Abstract / PDF [1.15M]

- File System Virtual Appliances: Portable File System Implementations. Michael Abd-El-Malek, Matthew Wachs, James Cipar, Karan Sanghi, Gregory R. Ganger, Garth A. Gibson, Michael K. Reiter. Carnegie Mellon University Parallel Data Lab Technical Report CMU-PDL-09-102, May 2009.

Abstract / PDF [486K]

- File System Virtual Appliances: Third-party File System Implementations without the Pain. Michael Abd-El-Malek, Matthew Wachs, James Cipar, Gregory R. Ganger, Garth A. Gibson, Michael K. Reiter. Carnegie Mellon University Parallel Data Lab Technical Report CMU-PDL-08-106, May 2008.

Abstract / PDF [508K]

Acknowledgements

This material is based on research sponsored in part by the National Science Foundation, via grants CNS-0326453 and CCF-0621499, by the Department of Energy, under Award Number DE-FC02-06ER25767, and by the Army Research Office, under agreement number DAAD19-02-1-0389.

We thank the members and companies of the PDL Consortium: Amazon, Google, Hitachi Ltd., Honda, Intel Corporation, IBM, Jane Street, Meta, Microsoft Research, Oracle Corporation, Pure Storage, Salesforce, Samsung Semiconductor Inc., Two Sigma, and Western Digital for their interest, insights, feedback, and support.