Expressive Storage Interfaces

|

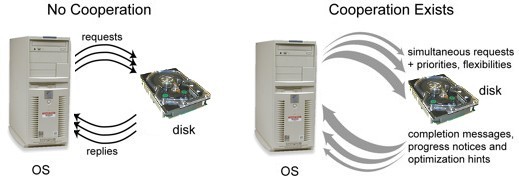

Both

systems allow the host OS to make simultaneous requests to the

disk, but cooperative interfaces also allow the host to tell

the disk what is important and what options are acceptable,

allowing the disk to specialize actions to host needs. Cooperative

interfaces also allow the disk to tell the host OS about data

layout and access patterns. |

The goal of the ESI projects is to increase cooperation between device firmware and OS software in order to significantly improve end-to-end performance and system robustness. The fundamental problem is that the storage interface hides details from both sides and prevents communication between them. This could be avoided by allowing the host software and device firmware to exchange information. The host software knows the relative importance of requests and has some ability to manipulate the locations that are accessed. The device firmware knows what the device hardware is capable of in general and what would be most efficient at any given point. In short, the host software knows what is important and the device firmware knows what is fast. By exploring new storage interfaces and algorithms for exchanging and exploiting this combined knowledge, and by developing cooperation between devices and applications, we hope to eliminate redundant, guess-based optimization. The result would be storage systems that are simpler, faster, and more manageable.

Examples of ongoing projects that hope to achieve these goals include improving host software with Track-aligned Extents (traxtents) and improving disk firmware with Freeblock Scheduling.
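As a rough illustration of the division of knowledge described above, the following minimal sketch shows a host attaching an importance level and a set of acceptable locations to each request, and a device-side routine choosing the cheapest acceptable option among the most important requests. Everything here is hypothetical and for illustration only: `Request`, `positioning_cost`, and `choose_next` are made-up names, and the distance-based cost is a stand-in for what real firmware would know about seek and rotational latency. It is not the interface used by any ESI project.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    name: str
    importance: int          # host-side knowledge: higher means more urgent
    acceptable_lbns: tuple   # host-side knowledge: any of these locations will do


def positioning_cost(head_lbn, lbn):
    # Assumed stand-in for device-side knowledge; a real drive would
    # account for seek time and rotational position, not plain distance.
    return abs(head_lbn - lbn)


def choose_next(head_lbn, pending):
    """Among the most important requests, pick the cheapest acceptable location."""
    top = max(r.importance for r in pending)
    candidates = [
        (positioning_cost(head_lbn, lbn), r.name, lbn)
        for r in pending
        if r.importance == top
        for lbn in r.acceptable_lbns
    ]
    _, name, lbn = min(candidates)
    return name, lbn


pending = [
    Request("log-write", importance=2, acceptable_lbns=(900, 120)),
    Request("prefetch", importance=1, acceptable_lbns=(105,)),
    Request("replica-read", importance=2, acceptable_lbns=(400, 5000)),
]

print(choose_next(100, pending))
```

With the head at block 100, the low-importance prefetch is skipped even though it is closest, and the device services the log write at its nearer acceptable location. Neither side could make that choice alone: the host does not know the head position, and the device does not know which requests matter or which alternatives are equivalent.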

More Information

- Extended Overview

- White Paper: Blurring the Line Between OSes and Storage Devices. Ganger. CMU-CS-01-166, December 2001.

People

FACULTY

STUDENTS

Garth Goodson

John Griffin

Chris Lumb

Mike Mesnier

Brandon Salmon

Jiri Schindler

Eno Thereska

Publications

- On Multidimensional Data and Modern Disks. Steven W. Schlosser, Jiri Schindler, Stratos Papadomanolakis, Minglong Shao, Anastassia Ailamaki, Christos Faloutsos, Gregory R. Ganger. Proceedings of the 4th USENIX Conference on File and Storage Technologies (FAST '05). San Francisco, CA. December 13-16, 2005.

Abstract / PDF [220K]

- MultiMap: Preserving disk locality for multidimensional datasets. Minglong Shao, Steven W. Schlosser, Stratos Papadomanolakis, Jiri Schindler, Anastassia Ailamaki, Christos Faloutsos, and Gregory R. Ganger. Technical Report CMU-PDL-05-102. Carnegie Mellon University, April 2005.

Abstract / PDF [318K]

- D-SPTF: Decentralized Request Distribution in Brick-based Storage Systems. Christopher R. Lumb, Richard Golding, Gregory R. Ganger. Proceedings of ASPLOS '04, October 7–13, 2004, Boston, Massachusetts, USA.

Abstract / PDF [281K]

- Atropos: A Disk Array Volume Manager for Orchestrated Use of Disks. Jiri Schindler, Steven W. Schlosser, Minglong Shao, Anastassia Ailamaki, Gregory R. Ganger. Proceedings of the 3rd USENIX Conference on File and Storage Technologies (FAST '04). San Francisco, CA. March 31, 2004. Supersedes Carnegie Mellon University Parallel Data Lab Technical Report CMU-PDL-03-101, December, 2003.

Abstract / PDF [281K]

- MEMS-based storage devices and standard disk interfaces: A square peg in a round hole? Steven W. Schlosser, Gregory R. Ganger. Proceedings of the 3rd USENIX Conference on File and Storage Technologies (FAST '04). San Francisco, CA. March 31, 2004. Supersedes Carnegie Mellon University Parallel Data Lab Technical Report CMU-PDL-03-102, December, 2003.

Abstract / Postscript [2.8M] / PDF [156K]

- D-SPTF: Decentralized Request Distribution in Brick-based Storage. Christopher R. Lumb, Gregory R. Ganger, Richard Golding. Carnegie Mellon University School of Computer Science Technical Report CMU-CS-03-202, November, 2003.

Abstract / PDF [475K]

- Lachesis: Robust Database Storage Management Based on Device-specific Performance Characteristics. Jiri Schindler, Anastassia Ailamaki, Gregory R. Ganger. VLDB 03, Berlin, Germany, Sept 9-12, 2003. Also available as Carnegie Mellon University Technical Report CMU-CS-03-124, April 2003.

Abstract / Postscript [510K] / PDF [152K]

- Exposing and Exploiting Internal Parallelism in MEMS-based Storage. Steven W. Schlosser, Jiri Schindler, Anastassia Ailamaki, Gregory R. Ganger. Carnegie Mellon University Technical Report CMU-CS-03-125, March 2003.

Abstract / Postscript [1.67M] / PDF [136K]

- Analysis of Methods for Scheduling Low Priority Disk Drive Tasks. Jiri Schindler, Eitan Bachmat. Proceedings of SIGMETRICS 2002 Conference, June 15-19, 2002, Marina Del Rey, California.

Abstract / Postscript [237K] / PDF [132K]

- Track-aligned Extents: Matching Access Patterns to Disk Drive Characteristics. Jiri Schindler, John Linwood Griffin, Christopher R. Lumb, Gregory R. Ganger. Conference on File and Storage Technologies (FAST), January 28-30, 2002. Monterey, CA. Also available as CMU SCS Technical Report CMU-CS-01-119.

Abstract / Postscript [682K] / PDF [159K]

- Freeblock Scheduling Outside of Disk Firmware. Christopher R. Lumb, Jiri Schindler, Gregory R. Ganger. Conference on File and Storage Technologies (FAST), January 28-30, 2002. Monterey, CA. Also available as CMU SCS Technical Report CMU-CS-01-149.

Abstract / Postscript [643K] / PDF [150K]

- Blurring the Line Between OSes and Storage Devices. Gregory R. Ganger. CMU SCS Technical Report CMU-CS-01-166, December 2001.

Abstract / Postscript [2.3M] / PDF [974K]

- Towards Higher Disk Head Utilization: Extracting "Free" Bandwidth From Busy Disk Drives. Lumb, C., Schindler, J., Ganger, G.R., Nagle, D.F. and Riedel, E. Appears in Proc. of the 4th Symposium on Operating Systems Design and Implementation, 2000. Also published as CMU SCS Technical Report CMU-CS-00-130, May 2000.

Abstract / Postscript [2.3M] / PDF [422K]

- Automated Disk Drive Characterization. Schindler, J. and Ganger, G.R. CMU SCS Technical Report CMU-CS-99-176, December 1999.

Abstract / Postscript [341K] / PDF [282K]

Acknowledgements

This material is based upon work supported by the National Science Foundation under Grant No. 0113660. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the National Science Foundation.

We thank the members and companies of the PDL Consortium: Amazon, Google, Hitachi Ltd., Honda, Intel Corporation, IBM, Meta, Microsoft Research, Oracle Corporation, Pure Storage, Salesforce, Samsung Semiconductor Inc., Two Sigma, and Western Digital for their interest, insights, feedback, and support.