
OVERVIEW/BASICS: The DCO is located in the Collaborative Innovation Center (CIC) on the Carnegie Mellon University campus.

The DCO is housed in a highly visible showcase in a main hallway on the main floor of the CIC building. Equipment is visible through a windowed wall, which also holds a monitor displaying live stats.

The first zone, housing 325 computers, including blade servers, computing nodes, and storage servers, came online in April of 2006.

The second zone of the DCO, on the right, came online in October 2008. Each zone is a self-contained collection of hardware, power and cooling.

SPECIFICATIONS: The DCO will be populated in four phases/zones. The first zone came online in March, 2006. The second zone came online in October of 2008.

The DCO is an extraordinarily dense computing environment. It is 1,825 sq ft in size, plus adjacent administrator space, and is designed to handle an ultimate computer load of 774 kW (424 W/sq ft). Another way to think about this: the average home draws about 1 kW of electrical power, so our 1,825 sq ft facility (roughly the size of an average home) draws about the same power as 750 homes. This highlights both the importance of energy management in large-scale computing systems and the ability to build a densely populated installation.
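The arithmetic behind these comparisons is easy to check. The sketch below uses only the numbers quoted above. The 1 kW-per-home figure is the text's rough rule of thumb, not a measurement. The script reproduces the 424 W/sq ft density and gives 774 home-equivalents, which the text rounds to about 750.

```python
# Figures quoted in the text; AVG_HOME_KW is the text's rule of thumb.
FLOOR_AREA_SQFT = 1_825
DESIGN_LOAD_KW = 774.0
AVG_HOME_KW = 1.0


def power_density_w_per_sqft(load_kw: float, area_sqft: float) -> float:
    """Spread the design load over the floor area, in watts per square foot."""
    return load_kw * 1_000 / area_sqft


def home_equivalents(load_kw: float, home_kw: float = AVG_HOME_KW) -> float:
    """Number of average homes that together draw the same power as the load."""
    return load_kw / home_kw


if __name__ == "__main__":
    density = power_density_w_per_sqft(DESIGN_LOAD_KW, FLOOR_AREA_SQFT)
    homes = home_equivalents(DESIGN_LOAD_KW)
    print(f"{density:.0f} W/sq ft, roughly {homes:.0f} homes")
```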

The DCO will ultimately contain 40 racks of computers, or about 1,000 machines and multiple petabytes of storage. Each compute, storage, or networking rack is budgeted for about 2,000 lb and a 25 kW power draw. In the event of a catastrophic loss of power or chilled water, a controlled, automated shutdown takes place. The time allotted for this is four minutes, limited by battery capacity and the chilled water supply.

DESIGN & CONSTRUCTION: The data center is partitioned into zones to bring the cooling as close to the load as possible. Each zone is a single self-contained, heat-enclosed space. This interior view of Zone 1 shows that the ‘hot aisle’ portion of a zone is fully accessible, giving administrators access to the rear of each rack.

High Density Enclosure (HDE) & Cooling: Each zone consists of an enclosure containing 12 racks. The heat produced by the machines running in the zone is contained within this High Density Enclosure (HDE) and is removed by in-row A/C units. Each self-contained zone is temperature neutral with the rest of the room. Exterior room air is 72 degrees F and interior (hot aisle) temperature can be up to 90 degrees F. Here is a view into the front of one of the newer in-row AC units, showing the top blower in the unit.
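A common back-of-the-envelope rule relates heat load to the air the in-row units must move. For standard air, the sensible-heat relation is BTU/hr = 1.08 × CFM × ΔT(°F). The sketch below applies this rule to one rack at the 25 kW per-rack budget quoted earlier.

It rests on our own assumption that the 72 °F room air and the 90 °F hot-aisle limit can stand in for the rack's inlet and outlet temperatures. Treat the result as an order-of-magnitude estimate, not a DCO design figure.

```python
BTU_PER_HR_PER_WATT = 3.412   # 1 W is about 3.412 BTU/hr
SENSIBLE_HEAT_FACTOR = 1.08   # BTU/hr per (CFM x degF), standard-air approximation


def required_airflow_cfm(heat_load_w: float, delta_t_f: float) -> float:
    """Airflow needed to carry away heat_load_w with an air temperature rise of delta_t_f."""
    if delta_t_f <= 0:
        raise ValueError("temperature rise must be positive")
    return heat_load_w * BTU_PER_HR_PER_WATT / (SENSIBLE_HEAT_FACTOR * delta_t_f)


if __name__ == "__main__":
    rack_load_w = 25_000          # per-rack budget quoted in the text
    delta_t_f = 90.0 - 72.0       # assumption: room air in, hot-aisle limit out
    print(f"about {required_airflow_cfm(rack_load_w, delta_t_f):,.0f} CFM per rack")
```

Under these assumptions a fully loaded rack needs on the order of 4,400 CFM. A smaller allowed temperature rise raises that figure proportionally.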

This is a view of the back of the in-row AC units, with filters visible behind the doors. Temperature and humidity probes are visible at the top left corner of the unit.

A view of the front of one of the in-row AC units.

DCO chilled water loop schematic. This is an abbreviated schematic, showing how the cooling system moves the heat produced by the systems to the outside. It does not show actual lines running to Phase 2 of the DCO.

The DCO shares its chilled water system with other campus facilities (it doesn't have a dedicated chiller plant). The observation team is interested in measuring the effects of power density, and the accompanying heat, on the data center's physical infrastructure, and in finding ways to limit the negative impacts of a dense data center environment.

Water flow rates and temperatures, as well as the status of the pumps, are monitored. Redundant pumps run the local chilled water loop, which is connected to the university’s main chilled water loop through redundant heat exchangers.

This is the water pump control panel. In the event of a catastrophic loss of power or chilled water, the electrical and cooling systems were designed to operate for four minutes. If a catastrophic failure is detected, an automated system is engaged to perform a controlled shutdown within this time window.
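The detection and timing logic described above can be sketched as follows. This is a minimal illustration, not the DCO's actual shutdown system. The `PlantStatus` fields, the `MIN_FLOW_GPM` threshold, and the 30-second safety margin are all hypothetical. Only the four-minute window comes from the text.

```python
import time
from dataclasses import dataclass

SHUTDOWN_WINDOW_S = 4 * 60   # four-minute ride-through stated in the text
MIN_FLOW_GPM = 100.0         # hypothetical low-flow alarm threshold
SAFETY_MARGIN_S = 30.0       # hypothetical slack left at the end of the window


@dataclass
class PlantStatus:
    """Hypothetical snapshot of the signals the shutdown logic watches."""
    utility_power_ok: bool
    chilled_water_flow_gpm: float


def is_catastrophic(status: PlantStatus, min_flow_gpm: float = MIN_FLOW_GPM) -> bool:
    """Loss of power or of chilled water flow both count as catastrophic."""
    return (not status.utility_power_ok) or status.chilled_water_flow_gpm < min_flow_gpm


def shutdown_deadline(detected_at: float, window_s: float = SHUTDOWN_WINDOW_S) -> float:
    """Time by which every machine must be down, on the same clock as detected_at."""
    return detected_at + window_s


def plan_fits_window(step_durations_s: list[float],
                     window_s: float = SHUTDOWN_WINDOW_S,
                     margin_s: float = SAFETY_MARGIN_S) -> bool:
    """True if sequential shutdown steps finish with margin_s to spare."""
    return sum(step_durations_s) + margin_s <= window_s


if __name__ == "__main__":
    status = PlantStatus(utility_power_ok=True, chilled_water_flow_gpm=12.0)
    if is_catastrophic(status):
        deadline = shutdown_deadline(time.monotonic())
        print(f"controlled shutdown must finish within {deadline - time.monotonic():.0f} s")
```

The margin is there because a shutdown plan that uses the whole window leaves nothing for a slow machine. Checking the plan against the window ahead of time is cheaper than discovering the overrun during an outage.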

To ensure that enough chilled water can circulate in the DCO’s private loop if the chilled water supply fails, some of the pipes in the loop are upsized. The extra volume lets water circulate at full room load for four minutes while still maintaining cooling.
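A rough estimate shows why upsized pipes help. If the main loop stops supplying cold water, the heat from the room has to go into the water already in the private loop. The required volume is V = P·t / (ρ·c_p·ΔT).

The sketch below uses the 774 kW full load and four-minute window from the text. It also assumes two things of our own, which are not DCO figures:

- all heat goes into the loop water;
- the water may warm by 10 °F before cooling becomes inadequate.

```python
WATER_CP_J_PER_KG_K = 4186.0       # specific heat of water
WATER_DENSITY_KG_PER_M3 = 1000.0   # close enough for chilled water
GALLONS_PER_M3 = 264.172           # US gallons per cubic meter


def f_delta_to_k(delta_f: float) -> float:
    """Convert a temperature difference (not an absolute temperature) from degF to K."""
    return delta_f * 5.0 / 9.0


def ride_through_volume_m3(load_w: float, duration_s: float, allowed_rise_k: float) -> float:
    """Water volume whose temperature rise absorbs load_w for duration_s seconds."""
    if allowed_rise_k <= 0:
        raise ValueError("allowed temperature rise must be positive")
    energy_j = load_w * duration_s
    mass_kg = energy_j / (WATER_CP_J_PER_KG_K * allowed_rise_k)
    return mass_kg / WATER_DENSITY_KG_PER_M3


if __name__ == "__main__":
    # 774 kW and four minutes come from the text; the 10 degF rise is an assumption.
    volume = ride_through_volume_m3(774_000, 4 * 60, f_delta_to_k(10.0))
    print(f"{volume:.1f} m^3 (about {volume * GALLONS_PER_M3:,.0f} US gal)")
```

Under these assumptions the loop needs on the order of 8 m³ (roughly 2,100 US gallons) of water. Allowing a larger temperature rise shrinks that volume proportionally.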

The entry point of the chilled water supply to the DCO, shown during the construction phase.

A close-up view of the back of one of the AC units in Zone 1, with CMU-developed sensor boards and their associated battery packs visible on the unit.

Another construction-phase photo: water lines running through the DCO.

The Leak Detection System: Using the leak detection map, administrators can close in on the affected location and investigate. Alerts for leaks and cable problems are tied in with the APC environmental sensing equipment, providing remote monitoring capability.

The leak detection system monitors the underfloor space in the DCO for water leakage. A leak will cause the monitoring system to raise an alarm and display a distance measurement. The orange wires are the leak monitoring wires and the black wires are the ground system for the floor.
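The system reports a leak as a distance along the sensing cable. The leak detection map then ties that distance to a physical spot under the floor. The sketch below shows that lookup using Python's `bisect` module. The cable layout, segment boundaries, and labels in `CABLE_MAP` are invented for illustration and do not describe the DCO's real cable run.

```python
from bisect import bisect_right

# Hypothetical leak map: distance (ft) along the sensing cable at which each
# under-floor section begins, in increasing order. Illustrative values only.
CABLE_MAP = [
    (0.0, "chilled water entry"),
    (40.0, "Zone 1, under in-row AC units"),
    (95.0, "Zone 2, under in-row AC units"),
    (150.0, "PDU row"),
]
CABLE_LENGTH_FT = 200.0


def locate_leak(distance_ft: float,
                cable_map: list[tuple[float, str]] = CABLE_MAP,
                cable_length_ft: float = CABLE_LENGTH_FT) -> str:
    """Return the map section that contains the reported leak distance."""
    if not 0.0 <= distance_ft <= cable_length_ft:
        raise ValueError(f"{distance_ft} ft is not on the {cable_length_ft} ft cable")
    starts = [start for start, _ in cable_map]
    # bisect_right finds the first section starting after distance_ft;
    # the section just before it is the one containing the leak.
    return cable_map[bisect_right(starts, distance_ft) - 1][1]


if __name__ == "__main__":
    print(locate_leak(112.5))
```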

The monitoring console for the leak detection system.

A photo of the second transformer for the CIC. Most of the power from this unit, as well as from its companion, goes to the DCO. Grounding bars run around the base of the walls of the room.

This view shows the cables from the transformer going into conduits leading to the building switch room. Each conduit is tied into the grounding bus.

Power: The DCO receives power from two independent feeds that are connected to the CIC through a transfer switch. If the building experiences a problem on one feed, the transfer switch will automatically engage the other. The data center itself has no access to a backup generator; however, the chilled water pumps are connected to a backup generator.

Power panel interior: Power is distributed through power distribution units and their associated UPS racks. If a rack-mounted power panel goes out, redundant power supplies provide reserve power for a safe shutdown.

A view of the Power Distribution Units (PDUs) in a rack in Zone 2. Two units are necessary to account for increased power density requirements.

All machines have a remote serial console and are powered through the power distribution units (PDUs). This allows remote access to each system console over the network and lets the power to each machine be controlled individually. This photo shows the APC Environmental Monitoring Unit (EMU), with a console cable leading into the rack to the remote console unit.

Interior convenience outlets may be used to power console carts, etc.

Next to both entrances to the DCO, there is an Emergency Power Off switch, along with a phone and fire alarm pull.

Fire Suppression: Local code required a water-based fire control system, so there was no need to spend money on a gas system. Ultimately, the design went with a double-interlock, pre-action dry pipe system. Before water can enter the pipe, at least two of three events must occur: an alarm handle is pulled, a sprinkler head opens, or a smoke detector alarms.
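The two-of-three rule described above amounts to a small truth table. The function below models the rule exactly as this text states it, and the parameter names are our own. It is an illustration only: a real pre-action system implements its release logic in dedicated fire alarm and releasing equipment, not in software like this.

```python
from itertools import product


def release_water(alarm_handle_pulled: bool,
                  sprinkler_head_opened: bool,
                  smoke_detector_alarmed: bool) -> bool:
    """Admit water to the pipe only if at least two of the three events occurred."""
    return sum((alarm_handle_pulled, sprinkler_head_opened, smoke_detector_alarmed)) >= 2


if __name__ == "__main__":
    # Print the full truth table: 8 combinations of the three events.
    for events in product((False, True), repeat=3):
        print(events, "->", "water" if release_water(*events) else "dry")
```

Only four of the eight combinations admit water. A single accidental trigger, such as a bumped sprinkler head, leaves the pipe dry.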

Disabling the fire suppression system will notify the management office.

A view of the top of Zone 1, with cables and fire suppression clearly visible.

The entire building has an 18” raised floor, which allows it to maintain a grade-level entrance. The under-floor space holds the main electrical feeds (480 V) from the panels to the PDUs, where power is converted down to 208 V. It also holds the leak detection system, the chilled water piping, and the building air plenum. The under-floor space is not used for cooling the computing equipment; that is handled by the A/C units integrated into each zone.

Building code requires a certain number of fresh air changes in the room per day, based upon human occupancy. This is accomplished with building air entering through four perforated tiles (one per corner) and a single return (overhead, near the back door).

Security: Access to the room is limited to key personnel and controlled via Grey, a security system developed by CyLab researchers (http://www.ece.cmu.edu/~grey/). A computer- and phone-based system is connected to every door that provides access to the data center. Each staff member can enter an authorization code remotely via their cell phone to open a door. Administrators can also selectively authorize entrance for other Grey-enabled personnel who are not on the master list of pre-approved staff.
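The access rules described above can be summarized as follows:

- a pre-approved master list;
- administrators who can extend access to other enrolled users.

The sketch below is a deliberately simplified, hypothetical model of that policy. It is not how Grey itself is implemented. The class, field, and method names are our own.

```python
from dataclasses import dataclass, field


@dataclass
class DoorPolicy:
    """Hypothetical model of one data center door's access rules."""
    pre_approved: set[str]                 # the master list of staff
    admins: set[str]                       # who may grant extra access
    enrolled: set[str]                     # everyone with a Grey-enabled phone
    delegated: set[str] = field(default_factory=set)

    def authorize(self, admin: str, user: str) -> None:
        """Let an administrator grant entry to an enrolled user not on the master list."""
        if admin not in self.admins:
            raise PermissionError(f"{admin} is not an administrator")
        if user not in self.enrolled:
            raise PermissionError(f"{user} has no enrolled phone")
        self.delegated.add(user)

    def may_open(self, user: str) -> bool:
        """A user needs an enrolled phone plus either master-list or delegated access."""
        return user in self.enrolled and (user in self.pre_approved or user in self.delegated)


if __name__ == "__main__":
    door = DoorPolicy(pre_approved={"alice"}, admins={"alice"},
                      enrolled={"alice", "bob"})
    door.authorize("alice", "bob")
    print(door.may_open("bob"))
```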

EQUIPMENT & NETWORKING: The racks in Zone 1 were populated over approximately 18 months beginning in April 2006. Equipment is a heterogeneous mix of computers of different sizes (1U, 3U, blade) and manufacturers (Dell, HP, IBM, NetApp, Sun, and generic). Racks of 1U servers require secondary power distribution units to provide enough outlets for the computers. Gigabit Ethernet is used throughout, though InfiniBand has been deployed on some nodes for experimentation and 10 Gbps is coming.

Within a rack, machines generally have full bisection bandwidth. Each rack has four lines trunked back to a central switch, so there is less bandwidth between racks. The exception is the one rack that contains InfiniBand.
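Trunking a fixed number of uplinks from each rack means traffic leaving a rack competes for less bandwidth than the machines inside it can generate. The ratio is easy to compute. The text gives neither the per-rack machine count nor the uplink speed, so the sketch below assumes, hypothetically, 40 Gigabit-attached machines per rack and four 1 Gbps trunked uplinks.

```python
def oversubscription_ratio(machines_per_rack: int, machine_gbps: float,
                           uplinks: int, uplink_gbps: float) -> float:
    """Peak traffic the rack's machines can offer divided by its trunked uplink capacity."""
    if uplinks <= 0 or uplink_gbps <= 0:
        raise ValueError("rack needs at least one uplink with positive capacity")
    return (machines_per_rack * machine_gbps) / (uplinks * uplink_gbps)


if __name__ == "__main__":
    # Hypothetical rack: 40 Gigabit-attached machines, four 1 Gbps trunked uplinks.
    ratio = oversubscription_ratio(40, 1.0, 4, 1.0)
    print(f"{ratio:.0f}:1 oversubscribed between racks")
```

At 10:1, if every machine in such a rack sent traffic to other racks at once, each would get about a tenth of its link speed. Traffic that stays inside the rack is unaffected.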

Six fiber lines have been pulled into the room, but only two lines are currently employed (one of these is for redundancy). Applications are generally fully serviced within the room (both compute and storage), so a lot of bandwidth in/out of the room is not needed. This photo provides a view of the external network feeds, with the wall box containing connection points to in-wall cabling to the ECE routers.

Wires are color coded by function. Each wire is individually labeled on both ends, to identify source, destination and purpose. All machines and wires are cataloged in a database. This database drives shutdown scripts and other functions.
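A catalog like this can drive a shutdown script by putting machines in a safe order. For example, compute nodes can stop before the storage and network gear they depend on. The sketch below uses an in-memory SQLite database. The schema, the `shutdown_tier` column, and the host names are hypothetical. The text says wires are cataloged too, but only machines are modeled here. The script only prints the order rather than issuing shutdown commands.

```python
import sqlite3

# Hypothetical schema covering machines only.
SCHEMA = """
CREATE TABLE machines (
    hostname      TEXT PRIMARY KEY,
    zone          INTEGER NOT NULL,
    role          TEXT NOT NULL,
    shutdown_tier INTEGER NOT NULL  -- lower tiers are shut down first
);
"""

SAMPLE_ROWS = [
    ("compute-01", 1, "compute", 1),
    ("storage-01", 1, "storage", 2),
    ("switch-01", 1, "network", 3),
    ("compute-02", 2, "compute", 1),
]


def build_catalog(rows=SAMPLE_ROWS) -> sqlite3.Connection:
    """Create an in-memory catalog and load the given machine rows."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.executemany("INSERT INTO machines VALUES (?, ?, ?, ?)", rows)
    return conn


def shutdown_order(conn: sqlite3.Connection, zone=None) -> list[str]:
    """Host names in the order a shutdown script would stop them."""
    query = "SELECT hostname FROM machines"
    params: tuple = ()
    if zone is not None:
        query += " WHERE zone = ?"
        params = (zone,)
    query += " ORDER BY shutdown_tier, hostname"
    return [row[0] for row in conn.execute(query, params)]


if __name__ == "__main__":
    conn = build_catalog()
    for host in shutdown_order(conn, zone=1):
        print(f"would shut down {host}")  # a real script would act here
```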

Wiring is neatly wrapped and routed in the overhead trays. This is a view of the cables on top of Zone 1 in the cable runs, with power cables in a separate run. The top of an AC unit is visible about two-thirds of the way down the row. The ladder carrying the fiber optic cables for the external network feeds is in the near foreground.

A view of the back of the network/infrastructure rack and a rack of 1U servers. Color coding of the cables helps administrators keep track of cable function.

A close-up view of the back of a rack and its color-coded cabling. Labels identifying each cable are visible on its ends. The network switches are visible at the top, with the rack-level PDUs on the left and the remote console unit on the right.

New hardware is uncrated in an adjoining lab. This keeps debris out of the DCO. A server lift is used to transport machines from the lab into the DCO.

A picture of the server lift, along with a floor tile puller mounted on the wall.

Consumables are kept sorted and on hand (parts cart and cable bins). This shows the cart holding tools, screws, velcro, zip ties and other things used for cable and server installation.

A view of the parts cart, as well as bins holding cables of varying lengths and colors.

MONITORING: The DCO is deeply instrumented. Events are monitored via Nagios (http://www.nagios.org/), a host and service monitor designed to inform administrators of network problems. We run redundant Nagios servers in separate buildings that watch over each other. All data is collected and viewed via snm.
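Redundant monitors that watch over each other can be built from ordinary Nagios checks. A Nagios plugin reports status through its exit code (0 = OK, 1 = WARNING, 2 = CRITICAL, 3 = UNKNOWN) and a one-line text message. The sketch below is a hypothetical plugin that judges the peer server by the age of a heartbeat file the peer is assumed to update. The heartbeat-file convention and the 120 s and 300 s thresholds are our assumptions, not the DCO's configuration.

```python
import os
import time
from typing import Optional

# Standard Nagios plugin return codes.
OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3
LABELS = {OK: "OK", WARNING: "WARNING", CRITICAL: "CRITICAL", UNKNOWN: "UNKNOWN"}


def check_peer_heartbeat(last_seen: Optional[float], now: float,
                         warn_s: float = 120.0, crit_s: float = 300.0) -> tuple[int, str]:
    """Classify the peer by how long ago it last wrote its heartbeat."""
    if last_seen is None:
        return UNKNOWN, "no heartbeat found for peer"
    age = now - last_seen
    if age >= crit_s:
        return CRITICAL, f"peer silent for {age:.0f}s"
    if age >= warn_s:
        return WARNING, f"peer silent for {age:.0f}s"
    return OK, f"peer heartbeat {age:.0f}s old"


def main(argv: list[str]) -> int:
    """argv[1]: path of the heartbeat file the peer is assumed to touch (hypothetical)."""
    if len(argv) < 2:
        print("UNKNOWN - usage: check_peer HEARTBEAT_FILE")
        return UNKNOWN
    try:
        last_seen: Optional[float] = os.path.getmtime(argv[1])
    except OSError:
        last_seen = None
    status, message = check_peer_heartbeat(last_seen, time.time())
    print(f"{LABELS[status]} - {message}")
    return status
```

To run this as a real plugin, the script would add `import sys` and end with `sys.exit(main(sys.argv))`, so that Nagios receives the status code. Each server would run the check against the other.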

A picture of our NetBotz monitoring unit with an attached camera, which can publish pictures to the web. Power cords between the main PDUs and the racks can be seen behind the unit.

Monitored physical sensors include:

• per-machine and aggregate power usage

• air temperature and chilled water flow (all paneled meters)

• fan speeds, humidity, battery levels, leaks, etc.

Out-of-range conditions trigger a page to the administrator.
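The out-of-range check can be sketched as a comparison of each reading against a configured range, sending one page per violation. The sensor names and limits in `LIMITS` are hypothetical; a real site would take them from its own policy. `send_page` stands in for whatever paging gateway is actually used.

```python
from typing import Callable, Mapping

# Hypothetical operating limits; a real site would take these from its own policy.
LIMITS: dict[str, tuple[float, float]] = {
    "hot_aisle_temp_f": (60.0, 90.0),
    "humidity_pct": (20.0, 80.0),
    "ups_battery_pct": (50.0, 100.0),
}


def out_of_range(readings: Mapping[str, float],
                 limits: Mapping[str, tuple[float, float]] = LIMITS) -> list[str]:
    """Describe every reading that falls outside its configured range."""
    problems = []
    for name, value in readings.items():
        if name not in limits:
            continue  # no limit configured for this sensor
        low, high = limits[name]
        if not low <= value <= high:
            problems.append(f"{name}={value} outside [{low}, {high}]")
    return problems


def check_and_page(readings: Mapping[str, float],
                   send_page: Callable[[str], None],
                   limits: Mapping[str, tuple[float, float]] = LIMITS) -> int:
    """Page once per out-of-range reading; return the number of pages sent."""
    problems = out_of_range(readings, limits)
    for problem in problems:
        send_page(problem)
    return len(problems)


if __name__ == "__main__":
    check_and_page({"hot_aisle_temp_f": 95.0, "ups_battery_pct": 80.0}, print)
```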

This photo shows the power tap for the ION meter.

A view of the switch panel currently supplying power to Zone 1, with the power meter display (dco-ion1) at the top.

A close-up view of dco-ion1, showing a snapshot of power demand figures for Zone 1.

Another view of dco-ion1, showing additional power usage stats (volts, amps, power factor).
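Volts, amps, and power factor are enough to estimate real power. For a balanced three-phase load, P = √3 × V(line-to-line) × I × PF. The sketch below assumes a balanced load and uses illustrative readings (480 V, 100 A, PF 0.95), which are not actual dco-ion1 values. With those inputs, real power works out to roughly 79 kW out of about 83 kVA apparent power.

```python
import math


def three_phase_real_power_w(line_to_line_v: float, line_current_a: float,
                             power_factor: float) -> float:
    """Real power of a balanced three-phase load: sqrt(3) * V_LL * I * PF."""
    if not 0.0 <= power_factor <= 1.0:
        raise ValueError("power factor must be between 0 and 1")
    return math.sqrt(3) * line_to_line_v * line_current_a * power_factor


def apparent_power_va(line_to_line_v: float, line_current_a: float) -> float:
    """Apparent power of the same balanced load, in volt-amperes."""
    return math.sqrt(3) * line_to_line_v * line_current_a


if __name__ == "__main__":
    # Illustrative readings only, not real dco-ion1 values.
    p = three_phase_real_power_w(480.0, 100.0, 0.95)
    s = apparent_power_va(480.0, 100.0)
    print(f"real power {p / 1000:.1f} kW, apparent power {s / 1000:.1f} kVA")
```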

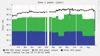

Power usage by Zone 1 over a year.

A view of the back of two DCO racks. An APC environmental sensor, which monitors hot aisle temperature and humidity, is visible in the middle of the frame.

One of the APC environmental sensors on the front door of a rack in Zone 1. This rack has a single probe in the middle of the front door.

Another rack, with two environmental sensors mounted on the front door.

Computer activity monitoring includes I/O, CPU and machine stats, disk stats, networking stats, etc. This image of compiled data highlights the machines involved in significant network traffic: the larger the dot and the wider the line, the more traffic a node is exchanging.
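One way to get dot sizes and line widths like these is to sum traffic per node and per node pair, then scale the totals into a drawing range. The sketch below assumes a hypothetical flow-record format, (source, destination, bytes). It is not the DCO's actual visualization tool. The linear scaling is our own choice; a log scale would suit very skewed traffic better.

```python
from collections import Counter
from collections.abc import Hashable, Iterable, Mapping


def traffic_totals(flows: Iterable[tuple[str, str, int]]) -> tuple[Counter, Counter]:
    """Sum bytes per node (dot size) and per undirected node pair (line width)."""
    node_bytes: Counter = Counter()
    edge_bytes: Counter = Counter()
    for src, dst, nbytes in flows:
        edge_bytes[tuple(sorted((src, dst)))] += nbytes
        node_bytes[src] += nbytes
        node_bytes[dst] += nbytes
    return node_bytes, edge_bytes


def scale(totals: Mapping[Hashable, int], smallest: float = 1.0,
          largest: float = 10.0) -> dict:
    """Map totals linearly onto [smallest, largest]; the busiest item gets largest."""
    if not totals:
        return {}
    peak = max(totals.values())
    if peak == 0:
        return {key: smallest for key in totals}
    return {key: smallest + (largest - smallest) * value / peak
            for key, value in totals.items()}


if __name__ == "__main__":
    nodes, edges = traffic_totals([("a", "b", 100), ("b", "a", 50), ("a", "c", 10)])
    print(scale(nodes), scale(edges))
```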

Operational records are maintained:

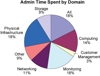

• administrator tasks and times

• component, system, application failures

• per-customer resource utilization
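Per-customer utilization can be rolled up from allocation records. The sketch below assumes a hypothetical record format of (customer, nodes, start, end) and reports node-hours. The DCO's actual record format is not described here.

```python
from collections import defaultdict
from datetime import datetime


def node_hours_by_customer(
        allocations: list[tuple[str, int, datetime, datetime]]) -> dict[str, float]:
    """Sum node-hours per customer from (customer, nodes, start, end) records."""
    totals = defaultdict(float)
    for customer, nodes, start, end in allocations:
        if end < start:
            raise ValueError(f"allocation for {customer} ends before it starts")
        totals[customer] += nodes * (end - start).total_seconds() / 3600
    return dict(totals)


if __name__ == "__main__":
    records = [
        ("projA", 10, datetime(2008, 1, 1, 0), datetime(2008, 1, 1, 6)),
        ("projB", 2, datetime(2008, 1, 1), datetime(2008, 1, 2)),
    ]
    print(node_hours_by_customer(records))
```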
