DISC-Holes: Data Models for Black-hole Datasets from Cosmological Simulations

We have developed algorithms and tools for storing and indexing black hole datasets produced by large-scale cosmological simulations. These tools are being used by astrophysicists at the McWilliams Center for Cosmology at Carnegie Mellon to analyze black hole datasets from the largest published simulations.

PROBLEM

Large-scale cosmological simulations play an important role in advancing our understanding of the formation and evolution of the universe. These computations require a large number of particles, on the order of 10 to 100 billion, to realistically model phenomena such as galaxy formation. Among these particles, black holes play a dominant role in structure formation. Black holes grow by accreting gas from their surroundings or by merging with nearby black holes. Cosmologists want to analyze black hole properties throughout the simulation at high temporal resolution in order to understand how supermassive black holes grow so large. To model the growth of these black holes, the properties of all the black holes that merged must be assembled into merger tree histories. In the past, these analyses were carried out with custom approaches that can no longer handle the size of the black hole datasets produced by state-of-the-art cosmological simulations.
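
To make the merger-tree assembly step concrete, here is a minimal Python sketch. It assumes a hypothetical merger-event record of the form (time, survivor_id, swallowed_id), in which the surviving black hole keeps its ID. The functions `build_forest`, `final_black_holes`, and `merger_tree` are illustrative names, not the project's code.

```python
from collections import defaultdict

# Hypothetical merger-event record: (time, survivor_id, swallowed_id).
# The surviving black hole keeps its ID; the swallowed one stops appearing
# in later snapshots.
MergerEvent = tuple[float, int, int]
Forest = dict[int, list[tuple[float, int]]]


def build_forest(events: list[MergerEvent]) -> Forest:
    """Map each survivor ID to the (time, swallowed_id) mergers it took part in."""
    progenitors: defaultdict[int, list[tuple[float, int]]] = defaultdict(list)
    for time, survivor, swallowed in sorted(events):
        progenitors[survivor].append((time, swallowed))
    return dict(progenitors)


def final_black_holes(events: list[MergerEvent]) -> set[int]:
    """Roots of the forest: black holes that survive mergers but are never swallowed."""
    survivors = {survivor for _, survivor, _ in events}
    swallowed = {victim for _, _, victim in events}
    return survivors - swallowed


def merger_tree(root: int, forest: Forest) -> set[int]:
    """All black hole IDs that ended up in `root`, including `root` itself."""
    members: set[int] = set()
    stack = [root]
    while stack:
        bh = stack.pop()
        if bh in members:
            continue
        members.add(bh)
        # Follow every black hole this one swallowed, and recursively theirs.
        stack.extend(victim for _, victim in forest.get(bh, []))
    return members
```

For example, with events `[(1.0, 10, 11), (2.0, 12, 13), (3.0, 10, 12)]`, black hole 10 is the only root, and its merger tree contains 10, 11, 12, and 13, because 12 had already swallowed 13 before merging into 10.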

RESULTS

We have developed a set of techniques that leverage relational database management systems to store and query a forest of black hole merger trees and their histories. We have tested this approach on datasets containing 0.5 billion history records for over 3 million black holes and 1 million merger events. The approach supports interactive analysis and enables flexible exploration of black hole databases.
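
A relational layout along the following lines could hold history records and merger events and answer merger-tree queries with a recursive SQL query. The sketch uses Python's built-in `sqlite3` module; the table and column names (`history`, `mergers`, `survivor_id`, `swallowed_id`) and the helper functions are hypothetical and are not taken from the project's published schema.

```python
import sqlite3

# Hypothetical schema: one row per (black hole, time) history record and
# one row per merger event. Names are illustrative only.
SCHEMA = """
CREATE TABLE history (
    bh_id          INTEGER NOT NULL,
    time           REAL    NOT NULL,
    mass           REAL    NOT NULL,
    accretion_rate REAL    NOT NULL,
    PRIMARY KEY (bh_id, time)
);
CREATE TABLE mergers (
    time         REAL    NOT NULL,
    survivor_id  INTEGER NOT NULL,
    swallowed_id INTEGER PRIMARY KEY  -- each black hole is swallowed at most once
);
CREATE INDEX mergers_by_survivor ON mergers (survivor_id);
"""

# Walk from one black hole back through every black hole that merged into it,
# directly or through earlier mergers.
PROGENITORS = """
WITH RECURSIVE tree(bh_id) AS (
    SELECT ?
    UNION
    SELECT m.swallowed_id
    FROM mergers AS m JOIN tree ON m.survivor_id = tree.bh_id
)
SELECT bh_id FROM tree ORDER BY bh_id
"""


def open_db(path: str = ":memory:") -> sqlite3.Connection:
    """Open a database and create the hypothetical schema."""
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn


def progenitors(conn: sqlite3.Connection, root: int) -> list[int]:
    """IDs of all black holes in root's merger tree, including root."""
    return [row[0] for row in conn.execute(PROGENITORS, (root,))]


def history_of(conn: sqlite3.Connection, bh_id: int) -> list[tuple[float, float]]:
    """(time, mass) history of one black hole, ordered by time."""
    return conn.execute(
        "SELECT time, mass FROM history WHERE bh_id = ? ORDER BY time", (bh_id,)
    ).fetchall()
```

The index on `survivor_id` lets each recursive step find a black hole's direct progenitors without scanning the whole `mergers` table, and `UNION` (rather than `UNION ALL`) discards duplicate IDs.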

|

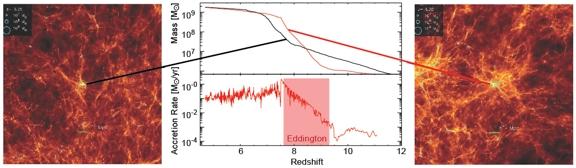

| Sample black holes extracted from a 65 billion particle, hydrodynamics Lambda-Cold Dark Matter (ΛCDM) simulation. This figure shows the gas distribution around two of the largest black holes in a snapshot from a recent simulation. The respective light curves for these black holes are shown in the plot, as well as the accretion rate history for the most massive one. |

More details: Summary of algorithms and empirical results

CHALLENGES

As cosmological simulations grow in size, so do the datasets they produce, including the detailed black hole history datasets. We are investigating new techniques to address the challenge of scaling to much larger data sizes, and we are developing a new generation of tools that will leverage distributed, scalable structured storage systems such as HBase and Cassandra.
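
One common way to lay out time-series records in a store that sorts rows by key bytes, as HBase does, is a composite row key whose byte order matches the desired scan order. The Python sketch below is an assumption for illustration, not the project's design: it packs a hypothetical 64-bit black hole ID and an integer time-step index as fixed-width big-endian unsigned integers, so that all records for one black hole are contiguous and ordered by time.

```python
import struct

# Hypothetical row-key layout: 8-byte black hole ID, then 8-byte time-step
# index, both unsigned big-endian, so lexicographic byte order (how HBase
# sorts row keys) matches numeric (bh_id, step) order.
ROW_KEY = struct.Struct(">QQ")


def row_key(bh_id: int, step: int) -> bytes:
    """Encode a (black hole ID, time step) pair as a 16-byte row key."""
    return ROW_KEY.pack(bh_id, step)


def decode_key(key: bytes) -> tuple[int, int]:
    """Recover (bh_id, step) from a row key."""
    return ROW_KEY.unpack(key)


def history_scan_range(bh_id: int) -> tuple[bytes, bytes]:
    """Start (inclusive) and stop (exclusive) keys covering one black hole's history.

    Assumes bh_id < 2**64 - 1 so that bh_id + 1 still fits in 8 bytes.
    """
    return row_key(bh_id, 0), row_key(bh_id + 1, 0)
```

With this layout, one black hole's full history is a single range scan between the two keys returned by `history_scan_range`; a time-major key would instead favor queries over all black holes in a single snapshot.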

PEOPLE

FACULTY

Eugene Fink

Garth Gibson

Julio López

GRADUATE STUDENTS

Colin Degraf

Bin Fu

EXTERNAL COLLABORATORS

Tiziana Di Matteo (Physics, Carnegie Mellon University)

Rupert Croft (Physics, Carnegie Mellon University)

PUBLICATIONS

- Recipes for Baking Black Forest Databases: Building and Querying Black Hole Merger Trees from Cosmological Simulations. Julio Lopez, Colin Degraf, Tiziana DiMatteo, Bin Fu, Eugene Fink, and Garth Gibson. Proceedings of the Twenty-Third Scientific and Statistical Database Management Conference (SSDBM 2011), 20-22 July 2011.


- Recipes for Baking Black Forest Databases: Building and Querying Black Hole Merger Trees from Cosmological Simulations. Carnegie Mellon University Parallel Data Lab Technical Report CMU-PDL-11-104. April 2011.
